#include <Scaler.h>

Public Member Functions | |

| StandardScaler (bool withMeans=true, bool withStd=true) | |

| void | fit (const Matrix< double > &X, const Matrix< double > &y) override |

| Matrix< double > | transform (const Matrix< double > &in) override |

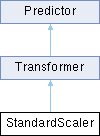

Public Member Functions inherited from Transformer | |

| Matrix< double > | predict (const Matrix< double > &in) override |

| virtual void | fit (const Matrix< double > &X, const Matrix< double > &y)=0 |

| virtual Matrix< double > | predict (const Matrix< double > &)=0 |

| virtual Matrix< double > | transform (const Matrix< double > &)=0 |

Public Attributes | |

| Matrix< double > | means |

| Matrix< double > | std_deviations |

Private Attributes | |

| bool | with_std = true |

| bool | with_means = true |

Detailed Description

Standardize features by removing the mean and scaling to unit variance.

The standard score of a sample $x \in X$ with $X \in \mathbf{R}^{N\times M}$ is calculated as $$ \tilde{x} = \frac{x - \mu}{\sigma} $$ with

- $\mu\in\mathbf{R}^M$ the mean of the training samples

- $\sigma\in\mathbf{R}^M$ the standard deviation of the training samples
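The formula above can be sketched for a single feature column as follows. This is a minimal illustration, not the class's implementation: the function name `standardScores` is hypothetical, `std::vector<double>` stands in for `Matrix<double>`, and the standard deviation is assumed to be the population one (dividing by $N$), which the documentation does not specify.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Standardize one feature column: (x - mu) / sigma.
// Assumption: sigma is the population standard deviation (divide by N).
std::vector<double> standardScores(const std::vector<double>& column) {
    const std::size_t n = column.size();
    if (n == 0) return {};

    // Mean of the training samples.
    double mu = 0.0;
    for (double x : column) mu += x;
    mu /= static_cast<double>(n);

    // Population variance and standard deviation.
    double variance = 0.0;
    for (double x : column) variance += (x - mu) * (x - mu);
    variance /= static_cast<double>(n);
    const double sigma = std::sqrt(variance);

    std::vector<double> scores(n, 0.0);
    if (sigma == 0.0) return scores;  // constant column: every score stays 0
    for (std::size_t i = 0; i < n; ++i) scores[i] = (column[i] - mu) / sigma;
    return scores;
}
```

For the column {2, 4, 4, 4, 5, 5, 7, 9} the mean is 5 and the population standard deviation is 2, so the first sample maps to -1.5 and the last to 2.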

Note: Standardization of a data set is a common requirement for many ML estimators: they might behave badly if the individual features do not more or less look like standard normally distributed data.

For instance, many elements used in the objective function of a learning algorithm assume that all features are centered around 0 and have variance of the same order. If a feature has a variance that is orders of magnitude larger than the others, it might dominate the objective function and make the estimator unable to learn from the other features as expected.[^1]

- Examples

- ds/preprocessing/TestScaler.cpp.

Constructor & Destructor Documentation

◆ StandardScaler()

| StandardScaler::StandardScaler (bool withMeans=true, bool withStd=true) | inline |

Member Function Documentation

◆ fit()

| void StandardScaler::fit (const Matrix< double > &X, const Matrix< double > &y) | inline override virtual |

Computes the means and standard deviations to be used later for scaling.

- Parameters

- X: matrix used to calculate the means and standard deviations

- y: unused

Implements Predictor.
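What `fit()` is described as computing can be sketched as a column-wise pass over the training matrix. The names `Rows`, `ColumnStats`, and `computeColumnStats` are hypothetical stand-ins (the real class stores its results in the `means` and `std_deviations` members of type `Matrix<double>`), and the population standard deviation is an assumption.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for Matrix<double>: rows are samples, columns are features.
using Rows = std::vector<std::vector<double>>;

// Hypothetical container for the statistics that fit() would store.
struct ColumnStats {
    std::vector<double> means;
    std::vector<double> stdDeviations;
};

// Compute the per-column mean and population standard deviation.
// Assumes X is non-empty and rectangular.
ColumnStats computeColumnStats(const Rows& X) {
    const std::size_t n = X.size();
    const std::size_t m = X.front().size();
    ColumnStats stats{std::vector<double>(m, 0.0), std::vector<double>(m, 0.0)};

    // First pass: column means.
    for (const auto& row : X)
        for (std::size_t j = 0; j < m; ++j) stats.means[j] += row[j];
    for (double& mu : stats.means) mu /= static_cast<double>(n);

    // Second pass: squared deviations from the mean, then the square root.
    for (const auto& row : X)
        for (std::size_t j = 0; j < m; ++j) {
            const double d = row[j] - stats.means[j];
            stats.stdDeviations[j] += d * d;
        }
    for (double& s : stats.stdDeviations) s = std::sqrt(s / static_cast<double>(n));
    return stats;
}
```

The target matrix `y` plays no role here, which matches its description as unused.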

◆ transform()

Performs standardization by centering and scaling, so that each feature is centered at 0 and has unit variance.

- Parameters

- in: data to transform

- Returns

- The transformed data

Implements Predictor.

Member Data Documentation

◆ means

| Matrix<double> StandardScaler::means |

◆ std_deviations

| Matrix<double> StandardScaler::std_deviations |

◆ with_means

| bool StandardScaler::with_means = true | private |

◆ with_std

| bool StandardScaler::with_std = true | private |

The documentation for this class was generated from the following file:

- include/math/ds/preprocessing/Scaler.h